One feature of Mac OS X that has long intrigued me is the Summarize service, which can generate a summary of a larger piece of text. In more recent versions of the OS it needs to be switched on in System Preferences. It is an example of a technique called automatic summarization. I thought it might be fun to try to perform a similar task in Swift. This is not an attempt to recreate the exact logic Apple used, but rather a look at how similar results can be achieved with some basic NLP techniques.

While there are many ways to achieve this goal, to keep it simple I'll use an approach to document summarization commonly known as extractive summarization. It builds a summary from the *k* most important sentences within the text without modifying them, where *k* is an input chosen by the user to control the length of the summary. This works on the principle that a few key sentences can give an overview of the text as a whole. So how do we know which sentences are the most important? Based on the observation that the most important topics of an article are often the most repeated, we can build a dictionary of word frequencies (a count of how many times each word appears in the article text), and from that compute a ranking score for each sentence by adding up the frequencies of the words it contains. Finally, we string together the *k* highest scoring sentences to form our summary.
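As a rough formalization of the scoring just described (the symbols $f$ and $W(s)$ are notation introduced here purely for illustration), the ranking score of a sentence $s$ can be written as:

$$
\operatorname{score}(s) = \sum_{w \in W(s)} f(w)
$$

Here $W(s)$ is the list of words in the sentence $s$, and $f(w)$ is the number of times the word $w$ appears in the whole article. The summary is then made up of the $k$ sentences with the largest scores.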

Let’s take this one step at a time, using this article on recent US policy changes to the ISS from the Washington Post as an example. The first step is computing the word frequencies. To do this we need to break the text up into individual words. Fortunately, there is a Foundation class to do just that: NSLinguisticTagger, which can enumerate all the tokens in a string. Tokens can be words, punctuation or whitespace. For our purposes we are only interested in the words, so we specify the omitWhitespace and omitPunctuation options. The joinNames option combines names that span multiple words into a single token, such as treating New York as one token rather than two.
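A minimal sketch of this first step might look like the following. The function name `wordFrequencies(in:)` and the decision to lowercase every word are assumptions made for illustration, not necessarily what the playground does. Note that NSLinguisticTagger is only available on Apple platforms and is deprecated on recent OS versions in favor of the Natural Language framework.

```swift
import Foundation

/// Counts how often each word occurs in `text`.
/// (Hypothetical helper; lowercasing is an assumption so that
/// "Station" and "station" are counted as the same word.)
func wordFrequencies(in text: String) -> [String: Int] {
    let tagger = NSLinguisticTagger(tagSchemes: [.tokenType], options: 0)
    tagger.string = text

    // NSLinguisticTagger works with NSRange, so measure the text in UTF-16 units.
    let fullRange = NSRange(location: 0, length: text.utf16.count)
    let options: NSLinguisticTagger.Options = [.omitWhitespace, .omitPunctuation, .joinNames]

    var frequencies: [String: Int] = [:]
    tagger.enumerateTags(in: fullRange, unit: .word, scheme: .tokenType, options: options) { _, tokenRange, _ in
        let word = (text as NSString).substring(with: tokenRange).lowercased()
        frequencies[word, default: 0] += 1
    }
    return frequencies
}

let counts = wordFrequencies(in: "The station orbits. The station is large.")
```

Sorting `counts` by value in descending order gives a ranking of the most frequent words.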

The following table lists the 10 most frequent words found in the article.

| Word | Frequency |
| --- | --- |
| the | 66 |
| to | 33 |
| of | 24 |
| and | 18 |
| station | 15 |
| that | 15 |
| a | 13 |
| in | 12 |
| said | 11 |
| it | 10 |

There is a problem here. The table is dominated by words that are unimportant to the meaning of the article. Languages like English have many words, like "the" and "a", which are important for the grammar of a sentence but not for picking out the main themes of an article. If left in the word frequencies they would dominate the rankings, skewing the sentence scores. Such words are called stop words, and we can filter them out. There is no standard list of stop words, so I will use Google's stop word list for English. Let’s update the approach so that stop words are not counted in the word frequencies. We store the stop words as a Set of Strings and check that a given word is not in the set before incrementing its frequency.
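The filtering step could be sketched roughly as below. The tiny `sampleStopWords` set and the `filteredFrequencies(of:ignoring:)` helper are made up for illustration; the real stop word list is much longer, and the words would come from the tokenizer rather than a hard-coded array.

```swift
// A tiny illustrative stop word set (assumption: a real list is far longer).
let sampleStopWords: Set<String> = ["the", "to", "of", "and", "that", "a", "in", "it"]

/// Counts word occurrences, skipping any word found in `stopWords`.
func filteredFrequencies(of words: [String], ignoring stopWords: Set<String>) -> [String: Int] {
    var frequencies: [String: Int] = [:]
    for word in words.map({ $0.lowercased() }) where !stopWords.contains(word) {
        frequencies[word, default: 0] += 1
    }
    return frequencies
}

let sampleWords = ["The", "station", "and", "the", "crew", "of", "the", "station"]
let filtered = filteredFrequencies(of: sampleWords, ignoring: sampleStopWords)
// filtered == ["station": 2, "crew": 1]
```

Using a Set keeps each membership check cheap, which matters because the check runs once per token.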

The following table lists the 10 most frequent non-stop words in the article.

| Word | Frequency |
| --- | --- |
| station | 15 |
| nasa | 10 |
| commercial | 8 |
| space | 7 |
| iss | 6 |
| private | 6 |
| will | 5 |
| international | 4 |
| document | 4 |
| transition | 4 |

This looks much better, with more of the words representing important terms and themes in the article. Next we need to identify the sentences. Here again NSLinguisticTagger comes to the rescue. In addition to the token range, the closure passed to enumerateTags also receives the range of the containing sentence, so we can track the sentences as we enumerate the tokens.
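One way to track sentences while enumerating tokens is sketched below. It relies on the older `enumerateTags(in:scheme:options:using:)` variant, whose closure receives both the token range and the sentence range. The function name and the tuple shape are assumptions for illustration, and this API is Apple-only and deprecated on recent OS versions.

```swift
import Foundation

/// Groups the lowercased words of `text` by the sentence containing them,
/// returning the sentences in order of appearance. (Hypothetical helper.)
func sentencesWithWords(in text: String) -> [(sentence: String, words: [String])] {
    let tagger = NSLinguisticTagger(tagSchemes: [.tokenType], options: 0)
    tagger.string = text
    let nsText = text as NSString
    let fullRange = NSRange(location: 0, length: nsText.length)
    let options: NSLinguisticTagger.Options = [.omitWhitespace, .omitPunctuation, .joinNames]

    var result: [(sentence: String, words: [String])] = []
    var currentSentenceStart = NSNotFound

    tagger.enumerateTags(in: fullRange, scheme: .tokenType, options: options) { _, tokenRange, sentenceRange, _ in
        // A new sentence start means we have moved on to the next sentence.
        if sentenceRange.location != currentSentenceStart {
            currentSentenceStart = sentenceRange.location
            let sentence = nsText.substring(with: sentenceRange)
                .trimmingCharacters(in: .whitespacesAndNewlines)
            result.append((sentence: sentence, words: []))
        }
        let word = nsText.substring(with: tokenRange).lowercased()
        result[result.count - 1].words.append(word)
    }
    return result
}

let grouped = sentencesWithWords(in: "NASA runs the station. The station is old.")
```

Each element's position in `grouped` doubles as the sentence index, which is needed later to restore the original order.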

Then we can compute the ranking score for each sentence by summing the word frequencies of each word in the sentence.
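As a small sketch of the scoring step, assuming the frequencies have already been computed with stop words excluded. The `score(words:frequencies:)` helper and the sample values are illustrative, and counting every occurrence of a repeated word is an assumption.

```swift
/// Scores a sentence by summing the frequencies of the words it contains.
/// Words missing from `frequencies` (such as stop words) contribute nothing.
func score(words: [String], frequencies: [String: Int]) -> Int {
    return words.reduce(0) { total, word in total + (frequencies[word] ?? 0) }
}

// Sample frequencies borrowed from the table above; the sentence is made up.
let sampleFrequencies = ["station": 15, "nasa": 10, "space": 7]
let sentenceWords = ["nasa", "leaves", "the", "space", "station"]
let ranking = score(words: sentenceWords, frequencies: sampleFrequencies)
// ranking == 32 (10 + 7 + 15)
```

Because the score is a plain sum, longer sentences tend to score higher, which is one reason this simple approach favors long sentences.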

The following table lists the 3 highest ranked sentences for the article.

| Sentence | Ranking | Index |
| --- | --- | --- |
| Last month, as reports circulated about NASA pulling the plug on the station, Mark Mulqueen, Boeing’s space station program manager, said “walking away from the International Space Station now would be a mistake, threatening American leadership and hurting the commercial market as well as the scientific community.” | 80 | 20 |
| The transition of the station would mark another bold step for NASA in turning over access to what’s known as low Earth orbit to the private sector so that the space agency could focus its resources on exploring deep space. | 72 | 23 |
| In its budget request, to be released Monday, the administration would request $150 million in fiscal year 2019, with more in additional years “to enable the development and maturation of commercial entities and capabilities which will ensure that commercial successors to the ISS — potentially including elements of the ISS — are operational when they are needed.” | 72 | 7 |

Having found our most important sentences, we string the *k* highest ranked ones together in the order they appeared in the original text. Keeping the original order matters when forming the summary; otherwise the result can look disjointed.
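The selection and reordering could be sketched like this. The `RankedSentence` type, the `summary(from:k:)` function and the placeholder sentences are all hypothetical, and breaking ranking ties in favor of the earlier sentence is an assumption.

```swift
/// A scored sentence; `index` is its position in the original text.
struct RankedSentence {
    let text: String
    let ranking: Int
    let index: Int
}

/// Picks the `k` highest ranked sentences and joins them in their original order.
func summary(from sentences: [RankedSentence], k: Int) -> String {
    let top = sentences
        // Highest ranking first; ties go to the earlier sentence (assumption).
        .sorted { $0.ranking != $1.ranking ? $0.ranking > $1.ranking : $0.index < $1.index }
        // Keep at most k sentences (a negative k is treated as 0).
        .prefix(max(0, k))
        // Restore the order in which the sentences appeared in the text.
        .sorted { $0.index < $1.index }
    return top.map { $0.text }.joined(separator: " ")
}

// Placeholder sentences using the rankings and indices from the table above.
let ranked = [
    RankedSentence(text: "First.", ranking: 72, index: 7),
    RankedSentence(text: "Filler.", ranking: 10, index: 12),
    RankedSentence(text: "Second.", ranking: 80, index: 20),
    RankedSentence(text: "Third.", ranking: 72, index: 23),
]
let result = summary(from: ranked, k: 3)
// result == "First. Second. Third."
```

Sorting twice keeps the code short: the first sort selects by ranking, and the second puts the chosen sentences back into reading order.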

For our example article and a value of *k* equal to 3, the following summary is generated.

In its budget request, to be released Monday, the administration would request $150 million in fiscal year 2019, with more in additional years “to enable the development and maturation of commercial entities and capabilities which will ensure that commercial successors to the ISS — potentially including elements of the ISS — are operational when they are needed.” Last month, as reports circulated about NASA pulling the plug on the station, Mark Mulqueen, Boeing’s space station program manager, said “walking away from the International Space Station now would be a mistake, threatening American leadership and hurting the commercial market as well as the scientific community.” The transition of the station would mark another bold step for NASA in turning over access to what’s known as low Earth orbit to the private sector so that the space agency could focus its resources on exploring deep space.

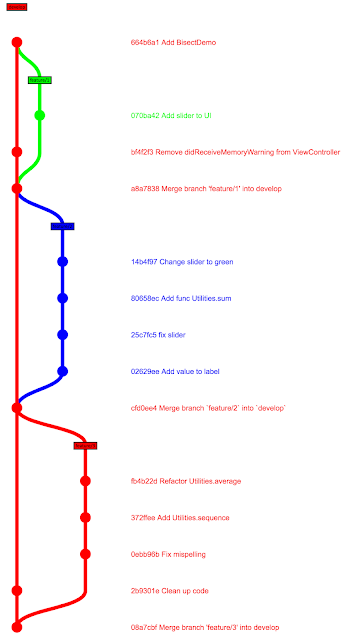

The full source for this post can be found on GitHub as a Swift playground. There are many methods of performing automatic summarization; this approach was chosen primarily for its simplicity, and other approaches can give better results.